Zero Trust

Zero trust is an approach to security that focuses on minimizing trust assumptions and implementing strict access controls and authentication mechanisms throughout an organization’s network and data environment. It provides a collection of concepts and ideas designed to minimize uncertainty and enforce accurate, least-privilege, per-request access decisions in information systems and services in the face of a network that is assumed to be compromised.

Zero trust moves defenses from static, network-based perimeters to focus on users, assets, and resources. It assumes there is no implicit trust granted to assets or user accounts based solely on their physical or network location (i.e., local area networks versus the internet) or based on asset ownership (enterprise or personally owned). Authentication and authorization (both subject and device) are discrete functions performed before a session to an enterprise resource is established.

In the current era where data flows between different clouds and datacenters, it is critical to apply security at the data layer by using encryption. Encryption makes security an attribute of the data, enabling granular access control regardless of where the data resides.

Confidential Computing is a technology that addresses the fallacy of trust between data and a host or virtual machine. At some point, data needs to be decrypted in a host’s memory to be processed by an application, at which point it becomes vulnerable. Confidential Computing provides a hardware-based trusted execution environment to encrypt data in use, maintaining the confidentiality and integrity of the data even if the host is compromised and an attacker is able to run malicious processes or scrape the memory.

The goal of zero trust for data

Zero trust addresses the following key objectives:

- Encryption

ZT promotes strong encryption techniques to protect data at rest, in transit, and in use. Because data is encrypted throughout its lifecycle, raw data is unusable without the corresponding decryption keys, even if unauthorized individuals gain access to it.

- Segmentation

ZT encourages segregating data into smaller, isolated components. This strategy limits lateral movement by unauthorized users and minimizes data exposure and the impact of a breach (“blast radius”).

- Continuous authentication

Specific mechanisms are employed to consistently verify the identity of users and devices throughout their interactions with the data. This approach validates that user access is aligned with the security policies.

- Multi-Factor Authentication (MFA)

This approach mandates using multiple factors, such as passwords, biometrics, and physical tokens, for verification. Requiring several factors decreases the likelihood of compromise even if one factor is breached. MFA helps ensure that only authorized individuals are granted access to sensitive data.

- Principle of least privilege

Strict access controls based on user identity, device health, and contextual factors are implemented to ensure that users and systems are provided the bare minimum level of access necessary to carry out their designated tasks.
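
The least-privilege objective can be illustrated with a deny-by-default check that is evaluated on every request. The following is a minimal sketch under stated assumptions, not a reference implementation: the `AccessRequest` type, the `POLICY` table, and the user and resource names are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    """One request to a resource (hypothetical shape, for illustration)."""
    user_id: str
    mfa_verified: bool      # identity signal: did the user pass MFA?
    device_compliant: bool  # device health signal
    resource: str
    action: str


# Hypothetical policy table: (user, resource) -> set of permitted actions.
# Anything not listed here is denied.
POLICY = {
    ("alice", "payroll-db"): {"read"},
    ("bob", "payroll-db"): {"read", "write"},
}


def authorize(request: AccessRequest) -> bool:
    """Evaluate a single request; deny unless every check passes."""
    if not request.mfa_verified:
        return False
    if not request.device_compliant:
        return False
    allowed_actions = POLICY.get((request.user_id, request.resource), set())
    return request.action in allowed_actions
```

Because the decision is recomputed per request, a change in device health or policy takes effect on the next call rather than at the next login. A real deployment would typically delegate this decision to a dedicated policy engine.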

Zero trust data security maturity model

The US Cybersecurity and Infrastructure Security Agency (CISA) published the Zero Trust Maturity Model (ZTMM), a framework to help organizations modernize their technology landscape in a rapidly evolving environment. Although specifically tailored for US federal agencies, any organization would benefit from adopting the approaches outlined in this framework to lower their cybersecurity risk.

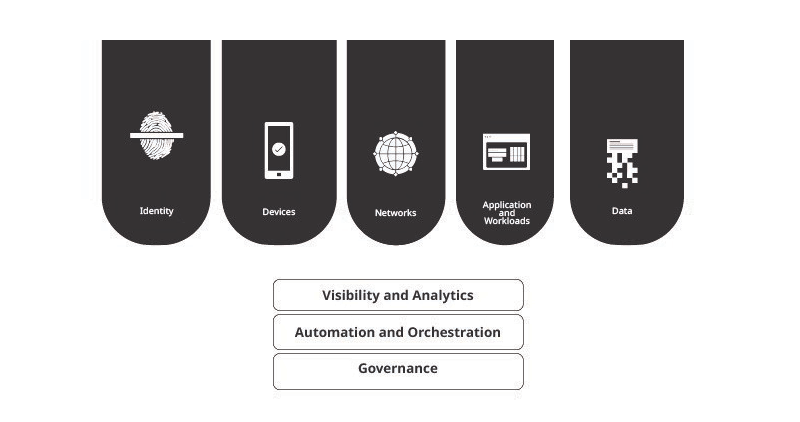

The ZTMM presents a progression of implementation across five distinct pillars, where gradual improvements can be made over time to attain optimization. These pillars, illustrated in Figure 1, encompass Identity, Devices, Networks, Applications and Workloads, and Data. Each pillar includes specific information about the following overarching capabilities: Visibility and Analytics, Automation and Orchestration, and Governance.

The ZTMM journey starts from a Traditional point and progresses through three stages, Initial, Advanced, and Optimal, which facilitate the implementation of a Zero Trust Architecture (ZTA). Each subsequent stage requires higher levels of protection, detail, and complexity for successful adoption. This whitepaper focuses exclusively on the data pillar and the functions that enable zero trust at the optimal stage for data security as per the ZTMM.

According to the ZTMM, organizations must protect their data on devices, in applications, and on networks in accordance with government requirements. They should inventory, categorize, and label their data. They should also protect data at rest and in transit, and deploy mechanisms to detect and block unauthorized data transfers and exfiltration. Organizations should create and regularly review data management policies so that all aspects of data security are followed consistently. The following data-related functions describe a zero trust approach at the optimal stage, including the Visibility and Analytics, Automation and Orchestration, and Governance capabilities:

Data inventory management:

Continuously keep track of all relevant data within the organization and use effective strategies to prevent data loss by blocking suspicious data transfers.

Data categorization:

Automate the categorization and labelling of data throughout the company using strong techniques, clear formats, and methods covering all data types.
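
Automated categorization and labelling could be sketched as a rule-based classifier. This is only an illustration under stated assumptions: the regular expressions, the category names, and the three sensitivity labels are hypothetical, and production systems generally combine many more detectors than simple pattern matching.

```python
import re

# Hypothetical detectors: category name -> pattern that signals it.
PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

# Hypothetical mapping from category to sensitivity label.
SENSITIVITY = {"email": "confidential", "us_ssn": "restricted"}

# Labels ordered from least to most sensitive.
LABEL_ORDER = ["public", "confidential", "restricted"]


def classify(text: str) -> dict:
    """Return the detected categories and the most sensitive matching label."""
    categories = sorted(
        name for name, pattern in PATTERNS.items() if pattern.search(text)
    )
    label = "public"
    for name in categories:
        candidate = SENSITIVITY[name]
        if LABEL_ORDER.index(candidate) > LABEL_ORDER.index(label):
            label = candidate
    return {"categories": categories, "label": label}
```

The resulting label can then be attached to the data as metadata so that downstream access and encryption policies can key off it.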

Data availability:

Use dynamic methods to optimize data availability based on the needs of users and entities, including access to historical data.

Data access:

Automate dynamic just-in-time and just-enough data access control and regularly review permissions.
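
Just-in-time and just-enough access can be pictured as short-lived grants that name a specific resource and a minimal set of actions. The sketch below is an assumption-laden illustration: the `Grant` type and the `issue_grant` and `is_allowed` helpers are hypothetical names, and the current time is passed in explicitly so that the behavior is easy to verify.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Grant:
    """A time-boxed permission scoped to one user and one resource."""
    user_id: str
    resource: str
    actions: frozenset
    expires_at: datetime


def issue_grant(user_id, resource, actions, ttl_minutes, now):
    """Just-in-time: create the grant only when needed, with a short lifetime."""
    return Grant(
        user_id=user_id,
        resource=resource,
        actions=frozenset(actions),  # just-enough: only the requested actions
        expires_at=now + timedelta(minutes=ttl_minutes),
    )


def is_allowed(grant, user_id, resource, action, now):
    """Allow only if the grant matches the request and has not expired."""
    return (
        grant.user_id == user_id
        and grant.resource == resource
        and action in grant.actions
        and now < grant.expires_at
    )


# Usage: a 15-minute read-only grant on a hypothetical resource.
start = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
grant = issue_grant("alice", "sales-report", {"read"}, ttl_minutes=15, now=start)
```

With this design, access lapses on its own once the grant expires, so the regular permission review mainly has to confirm that grants are being issued for the right reasons.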

Data encryption:

Encrypt data in use, follow the principle of least privilege for secure key management, and use up-to-date encryption standards and techniques whenever possible.

Visibility and analytics capability:

Have a clear view of the entire data lifecycle through robust analytics, including predictive analytics, to continuously assess the organization’s data and security.

Automation & orchestration capability:

Automate data lifecycles and security policies across the enterprise.

Governance capability:

Unify data lifecycle policies and dynamically enforce them across the enterprise.